Spec-driven development with Kiro

My new expert paired programming partner?

On July 14, AWS launched a new “AI IDE” named Kiro. The promise is not just to “vibe code” in an editor, but to have your AI partner work alongside you to build an application from a set of use-case-based requirements, detailed designs and specific tasks, with the requirements written using the Easy Approach to Requirements Syntax (EARS).

With Cursor, GitHub Copilot agents and Claude Code already available, do we need another AI programming tool? Are we not already able to engage AI effectively with vibe coding and these other agent interfaces? Can spec-driven development overcome some of the challenges of vibe coding? Can this be my “expert” companion, as if I were back to pair programming with a trusted partner by my side? Let’s explore some of these questions based on my hands-on experience with Kiro.

tl;dr - Kiro feels different. I’ve lightly used AI tools for programming, but Kiro gets me. The extra work to develop specs and steering docs pays off by keeping your AI buddy on task without backtracking on work that you and the AI had previously built.

Setup Experience

So, let’s dive into what this experience looked like. Similar to many other AI IDEs, Kiro is based on Code OSS and can import your existing VS Code settings. AWS says they needed a separate application since the plug-in architecture in VS Code limited some of the features they wanted to provide - more on that later.

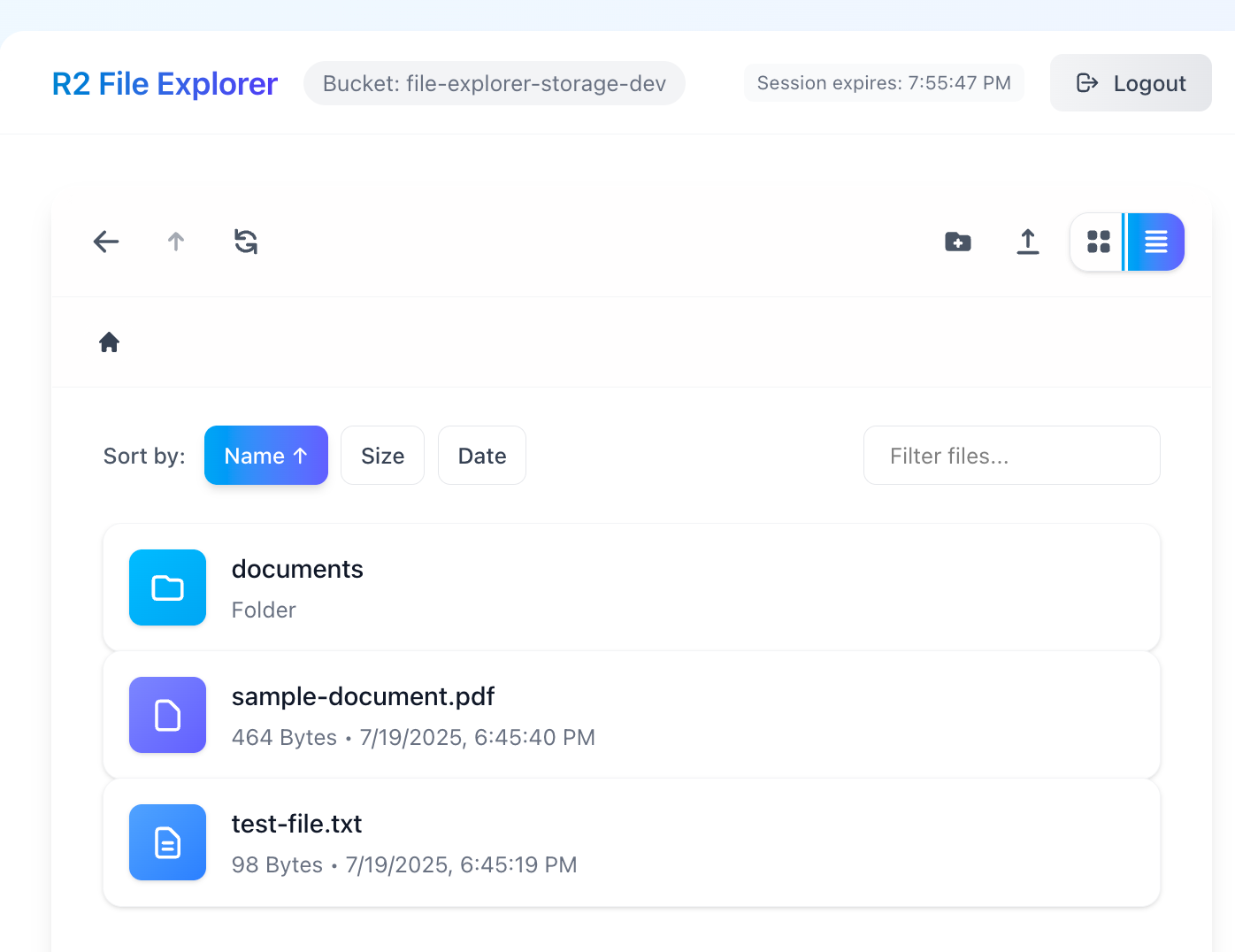

I downloaded Kiro, dragged it into my Applications folder and fired up a blank project. One way to get started is to load an existing codebase and work with Kiro to build out specs based on established code. However, I wanted to explore using Kiro with a brand-new project, and I focused on a non-AWS application to make sure it wasn’t just tuned to steer you into the AWS ecosystem. As a Cloudflare R2 customer (R2 hosts all the assets on my PNW.Zone Mastodon server), I decided to build a Cloudflare R2 file explorer. The entire project and commit history of our progress is available on GitHub.

Starting the Build

My first step was to prompt Kiro with what I wanted to build:

Let's build a spec for a new app. I'd like a web application that will provide a "File Explorer" like experience for my Cloudflare R2 bucket.
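Because R2, like S3, stores a flat list of object keys, a “File Explorer” has to present folders by grouping keys on the “/” separator. The following is not code from the project; it is a minimal TypeScript sketch in which `ListedObject`, `ExplorerEntry` and `listFolder` are hypothetical names, with `ListedObject` loosely modeled on the `key` and `size` fields that R2’s Workers binding returns from a listing.

```typescript
// Minimal shape of one listed object (hypothetical; only the fields used here).
interface ListedObject {
  key: string;
  size: number; // bytes
}

// One row in the explorer view.
interface ExplorerEntry {
  name: string; // name relative to the current folder
  kind: "folder" | "file";
  size?: number; // files only
}

// Group flat keys into the immediate sub-folders and files under `prefix`.
function listFolder(objects: ListedObject[], prefix: string): ExplorerEntry[] {
  const folders = new Set<string>();
  const files: ExplorerEntry[] = [];
  for (const obj of objects) {
    if (!obj.key.startsWith(prefix)) continue;
    const rest = obj.key.slice(prefix.length);
    if (rest === "") continue; // skip an object whose key equals the prefix itself
    const slash = rest.indexOf("/");
    if (slash === -1) {
      files.push({ name: rest, kind: "file", size: obj.size });
    } else {
      // Keep the trailing slash so folder names read like "avatars/".
      folders.add(rest.slice(0, slash + 1));
    }
  }
  const folderEntries: ExplorerEntry[] = [...folders]
    .sort()
    .map((name) => ({ name, kind: "folder" as const }));
  files.sort((a, b) => a.name.localeCompare(b.name));
  return [...folderEntries, ...files];
}

const sample: ListedObject[] = [
  { key: "media/avatars/alice.png", size: 2048 },
  { key: "media/header.jpg", size: 4096 },
  { key: "media/attachments/a.mp4", size: 100 },
  { key: "robots.txt", size: 24 },
];

console.log(listFolder(sample, "media/"));
```

Against a real bucket you would more likely let R2 do this grouping by calling `list({ prefix, delimiter: "/" })` on the bucket binding and reading the returned `delimitedPrefixes`, which avoids fetching every key under a prefix.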

The Kiro agent went to work, gave me a spec and then asked if I wanted to tweak anything. Once I approved it, it moved on to building the design. I should note that before I started this work, I configured two Cloudflare MCP servers to give Kiro deeper, direct knowledge of Cloudflare. The first, which Kiro used within seconds of my prompt above, was the Cloudflare Documentation MCP Server. The second was the Cloudflare Workers Bindings MCP Server, which allowed me to ask questions about my actual Cloudflare resources as well as take action on them (if I wanted to go there), all protected via an OAuth login to Cloudflare.

One way I iterated on the design was to ask Kiro to build the backend as a Rust-based Cloudflare Worker. I wanted the app to use Cloudflare OAuth, but after a bit of iterating, doc searching, etc., we determined that Cloudflare’s OAuth feature was for access to your internal resources and not for third-party applications, so we pivoted back to API tokens. I then let Kiro move on to building out the detailed tasks for the app, but later went back and asked it to update the design to add observability. This honestly was a mistake, and I later removed observability while debugging the app. My advice would be to start small and build up functionality as features start to work. Goodness, just like developing an app with your team! If let loose, Kiro will tend to over-engineer the implementation, but it can be reined in with proper direction.
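For context on the API-token route: Cloudflare API tokens are sent as a standard Bearer credential, and Cloudflare exposes a `GET /user/tokens/verify` endpoint for checking one. This is a minimal sketch, assuming a Workers or Node 18+ runtime with the global `fetch` and `Request`; `buildVerifyRequest` and `tokenIsActive` are hypothetical helpers, and the app’s actual R2 calls may well use a different endpoint or R2’s S3-compatible API.

```typescript
// Base URL of Cloudflare's v4 REST API.
const CF_API = "https://api.cloudflare.com/client/v4";

// Build (but do not send) a request that checks an API token.
function buildVerifyRequest(apiToken: string): Request {
  return new Request(`${CF_API}/user/tokens/verify`, {
    method: "GET",
    headers: { Authorization: `Bearer ${apiToken}` },
  });
}

// Send the request and report whether Cloudflare says the token is active.
async function tokenIsActive(apiToken: string): Promise<boolean> {
  const res = await fetch(buildVerifyRequest(apiToken));
  if (!res.ok) return false;
  const body = (await res.json()) as {
    success: boolean;
    result?: { status?: string };
  };
  return body.success && body.result?.status === "active";
}
```

Keeping the token in the backend (for example as a Worker secret) rather than in the React frontend means the browser never holds a credential with access to the bucket.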

I should note that Kiro is in preview and is free to use during this period. But you will run into errors like this one (so be patient):

The model you've selected is experiencing a high volume of traffic. Try changing the model and re-running your prompt.

Switching from Claude Sonnet 4.0 down to 3.7 often got past this error, but after a few hours of continuous work, I would see:

You've reached your daily usage limit. Please return tomorrow to continue building.

The daily limits gave me 2-3 hours of interactions, which was enough to complete a few tasks per day. So at the time of writing, I’ve got the basic login, file listing and file download features working within the app. AWS just announced its new pricing model and that waitlist invites would be sent out soon.

The Parts of Kiro

But let’s back up and talk about some of the key elements of the Kiro development environment. When you select the Kiro ghost icon in the Activity Bar, there are 4 key Kiro settings: Specs, Agent Hooks, Agent Steering, MCP Servers.

Specs

Each spec is broken into three distinct parts: Requirements, Design & Task List. You work with Kiro to progressively move from use-case-based requirements, through a design, to specific implementation tasks.
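To make the EARS idea concrete: EARS requirements follow a handful of sentence templates (ubiquitous, event-driven, state-driven, unwanted behavior and optional feature), and Kiro’s generated requirements lean on the event-driven “WHEN ... SHALL” form. The classifier below is a hypothetical sketch for spotting which template a requirement uses, for example while reviewing a spec; it only checks leading keywords and ignores EARS’s combined (“complex”) templates.

```typescript
type EarsPattern =
  | "event-driven"
  | "state-driven"
  | "unwanted-behavior"
  | "optional-feature"
  | "ubiquitous"
  | "unrecognized";

// Leading-keyword checks for the basic EARS templates, tried in order.
const EARS_TEMPLATES: ReadonlyArray<[EarsPattern, RegExp]> = [
  ["event-driven", /^when\b.+\bshall\b/i], // WHEN <trigger> ... SHALL <response>
  ["state-driven", /^while\b.+\bshall\b/i], // WHILE <state> ... SHALL <response>
  ["unwanted-behavior", /^if\b.+\bthen\b.+\bshall\b/i], // IF <trigger> THEN ... SHALL
  ["optional-feature", /^where\b.+\bshall\b/i], // WHERE <feature> ... SHALL
  ["ubiquitous", /^the\b.+\bshall\b/i], // THE <system> SHALL <response>
];

function classifyRequirement(text: string): EarsPattern {
  const sentence = text.trim();
  for (const [pattern, template] of EARS_TEMPLATES) {
    if (template.test(sentence)) return pattern;
  }
  return "unrecognized";
}

const requirements = [
  "WHEN the user selects a file THEN the system SHALL start a download",
  "IF the API token is rejected THEN the system SHALL show a login error",
  "Make it look nice",
];
for (const r of requirements) console.log(classifyRequirement(r), "<-", r);
```

A requirement that lands in “unrecognized” is usually a sign it is too vague to hand to the agent as-is.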

At first, Kiro created one spec for the entire app. However, as we iterated and made improvements, it started to break functionality out into smaller specs. I learned this was the best way to focus Kiro on exactly what you want implemented or fixed. I then started to create “sub-specs” (my terminology) for things like a deep dive on enhancing the security posture of the app.

Agent Hooks

One of the advantages of being distributed as a fork of Code OSS is that AWS could implement deep hooks to do things like watch for file changes and trigger the agent to take action. In the second half of my development, I experimented with a hook that would automatically generate a Git commit message and push the changes whenever a task was completed.
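The hook itself is configured inside Kiro, but the logic behind “a task was completed” can be sketched in a few lines. This is a hypothetical illustration, not Kiro’s hook format: it assumes the task list is a markdown checklist (`- [ ]` / `- [x]`) and compares two versions of it to find newly checked tasks and draft a commit message. `parseTasks`, `newlyCompleted` and `commitMessage` are made-up names.

```typescript
// Matches checklist lines such as "  - [x] 2.1 Token form".
const TASK_LINE = /^\s*-\s\[( |x|X)\]\s+(.+?)\s*$/;

interface TaskItem {
  title: string;
  done: boolean;
}

function parseTasks(markdown: string): TaskItem[] {
  const tasks: TaskItem[] = [];
  for (const line of markdown.split("\n")) {
    const m = TASK_LINE.exec(line);
    if (m) tasks.push({ title: m[2], done: m[1].toLowerCase() === "x" });
  }
  return tasks;
}

// Titles that are checked in `after` but were not checked in `before`.
function newlyCompleted(before: string, after: string): string[] {
  const doneBefore = new Set(
    parseTasks(before).filter((t) => t.done).map((t) => t.title),
  );
  return parseTasks(after)
    .filter((t) => t.done && !doneBefore.has(t.title))
    .map((t) => t.title);
}

// Subject line plus a bullet per completed task; null when nothing changed.
function commitMessage(completed: string[]): string | null {
  if (completed.length === 0) return null;
  const subject =
    completed.length === 1
      ? `Complete task: ${completed[0]}`
      : `Complete ${completed.length} tasks`;
  return [subject, "", ...completed.map((t) => `- ${t}`)].join("\n");
}

const before = "- [x] 1. Set up project\n- [ ] 2. Add login\n  - [ ] 2.1 Token form";
const after = "- [x] 1. Set up project\n- [x] 2. Add login\n  - [x] 2.1 Token form";
console.log(commitMessage(newlyCompleted(before, after)));
```

A script like this could feed `git commit -m`; with a Kiro hook, the agent does the equivalent work itself.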

Agent Steering

Whenever you start a session with Kiro, it will first read all the Agent Steering docs. You can think of these as giving Kiro all the necessary background on how you want it to approach the implementation: everything from a high-level overview of the entire app down to specific toolchain and coding standards. These are those team standards docs that are written, but not always followed. I’ll say Kiro did a decent job of staying within the confines of the docs.

MCP Servers

Like other AI assistants, Kiro has native support for MCP (Model Context Protocol) servers, which give AI tools a standard way to connect with external tools. The Context7 server was a must-have, as it helped steer Kiro to dig into current documentation as it worked. Installation directions are right in the Context7 repo.
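As a rough illustration of the configuration involved, this sketch builds the JSON that MCP clients, Kiro included, read from an `mcpServers` object; for a Kiro workspace this typically lives in `.kiro/settings/mcp.json`. The Context7 package name comes from its README, and the Cloudflare entries use the remote servers listed in Cloudflare’s `mcp-server-cloudflare` repo, bridged through `mcp-remote`. Treat the server labels and exact URLs as assumptions and check the current docs before using them.

```typescript
// Shape of one stdio-launched server entry in an "mcpServers" config.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

const mcpConfig: McpConfig = {
  mcpServers: {
    // Context7: pulls current library documentation into the agent's context.
    context7: { command: "npx", args: ["-y", "@upstash/context7-mcp"] },
    // Cloudflare's remote servers, bridged to a local stdio process by mcp-remote.
    // The labels are arbitrary; the URLs are assumptions to verify.
    "cloudflare-docs": {
      command: "npx",
      args: ["mcp-remote", "https://docs.mcp.cloudflare.com/sse"],
    },
    "cloudflare-bindings": {
      command: "npx",
      args: ["mcp-remote", "https://bindings.mcp.cloudflare.com/sse"],
    },
  },
};

// Print the JSON to paste into the workspace MCP config file.
console.log(JSON.stringify(mcpConfig, null, 2));
```

The bindings server is the one that triggers the browser-based OAuth login to Cloudflare the first time it starts.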

How to Get Work Done

In other AI assistants, you may need to craft detailed free-form prompts to get work done. In Kiro’s spec-driven workflow, you direct it to implement tasks. This is the heart of the AI workflow. There is a nice interface to start a task directly from the markdown file, where you can see the file changes that task made, or go back to the AI execution window to see the work Kiro performed. By design, Kiro gets the focused context it needs to work on that task.

What did I learn?

I’m not gonna lie. I’m not a front-end developer, but Kiro helped me build a modern interface in React with Tailwind CSS despite my limited expertise. If you are an expert in front-end design & development, I’d like to hear how you think we did!

Lesson 1 - Specs first!: Spending time refining the specs before writing a line of code will pay dividends. As I mentioned above, I took the large “entire app” spec and began building, but later spent a bit of time refactoring and debugging because too much complexity was tackled right out of the gate.

Lesson 2 - Not production code!: It should be clear that this is not a tool that will build production code ready to deploy at the end of a session. I had to correct Kiro a number of times to get to a working prototype. There were incorrect implementation choices, such as first selecting Cloudflare Pages for hosting the app’s static assets even though Cloudflare is now pushing customers to use Workers for hosting static assets. We first started with a Rust implementation for the backend worker service, but after running into numerous build and deployment issues, I steered us to TypeScript so we’d have a common language between the frontend and backend service.
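Hosting static assets on Workers means one Worker can serve both the React build and the API. Here is a minimal TypeScript sketch of that shape, assuming an assets binding named `ASSETS` (declared in the Wrangler config’s `assets` section) plus a hypothetical `/api/` prefix and `/api/health` route; the local `Fetcher` and `Env` types stand in for `@cloudflare/workers-types` so the example compiles on its own.

```typescript
// Local stand-ins for the Workers types used here.
interface Fetcher {
  fetch(request: Request): Promise<Response>;
}
interface Env {
  ASSETS: Fetcher; // static assets binding (name assumed)
}

function json(body: unknown, status = 200): Response {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "content-type": "application/json" },
  });
}

// Hypothetical API routes for illustration only.
function handleApi(url: URL): Response {
  if (url.pathname === "/api/health") return json({ ok: true });
  return json({ error: "not found" }, 404);
}

const worker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    const url = new URL(request.url);
    if (url.pathname.startsWith("/api/")) return handleApi(url);
    // Everything else falls through to the built frontend assets.
    return env.ASSETS.fetch(request);
  },
};

export default worker;
```

By default, requests that match a file in the assets directory are served without invoking the Worker at all, so code like this mainly handles API calls and paths with no matching asset.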

Lesson 3 - Debugging Can Work with AI: The debugging workflow was interesting because I could do things like share screenshots of what I was seeing to debug Tailwind CSS issues, or paste in Chrome console logs and backend worker logs to debug routing issues. All these pieces of information greatly helped Kiro focus on the actual issue instead of guessing where the bug might be.

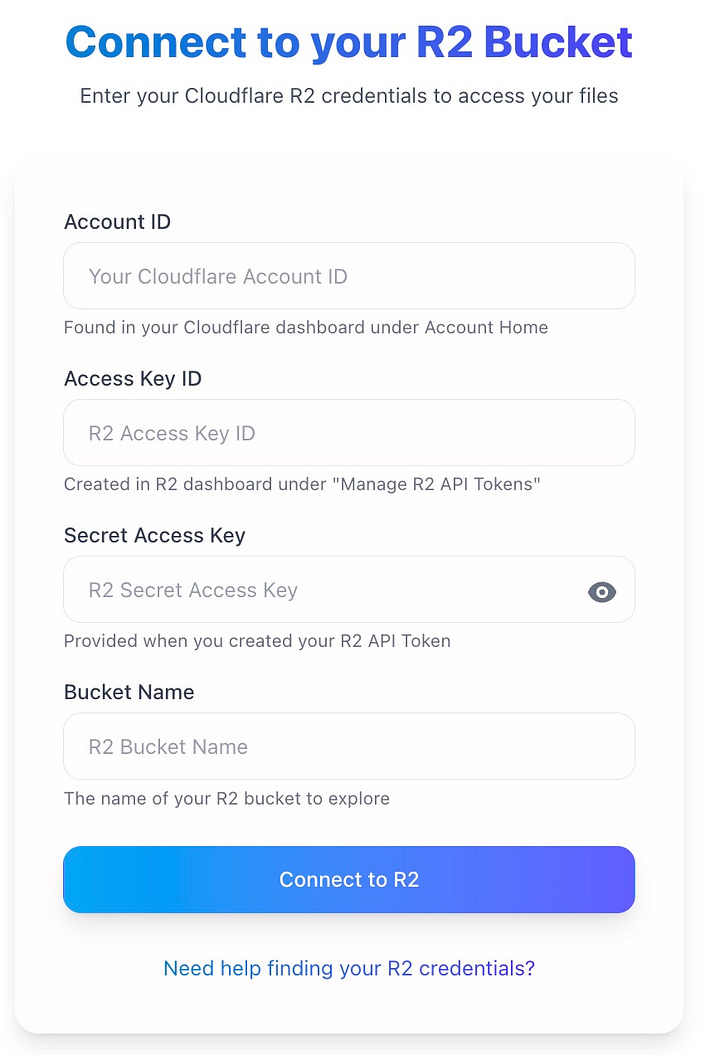

Here’s an example of the functional but unstyled login page on the left, and the correctly styled page on the right after we debugged the Tailwind CSS issue in this commit.

Lesson 4 - Moderate Docs: Kiro can help you create not just code, but also developer documentation in the form of designs, READMEs, guides, etc. If you let it loose, it can become overly verbose and repetitive, so at times I had to explicitly direct it to tone it down and consolidate content.

Lesson 5 - We still need humans!: This experience demonstrated that if I hadn’t brought my experience as a software developer, it would have been impossible to correct Kiro, move it in the right direction and build a functional app. Knowing what questions to ask, where to guide the AI and how to validate the implementation are all still human tasks. The AI developer assistant space is moving quickly, so it will be interesting to watch how the human dependency changes over the months and years ahead.

Final Thoughts

If you’ve read this far, I hope you found some value in hearing about my experience with Kiro. Yes, I believe Kiro is currently filling a gap in the AI development tools market. Clear specifications that can be committed and iterated on with your team will truly enable the use of AI tooling beyond a single-developer team. Go give it a try and share your thoughts in the comments!

This post was written by a human without AI assistance.